AI Hallucination: The Viral AI Overviews Pizza Glue Fiasco Explained

- Mohammed Jarekji

- Oct 24, 2025

When Search Went Off the Rails

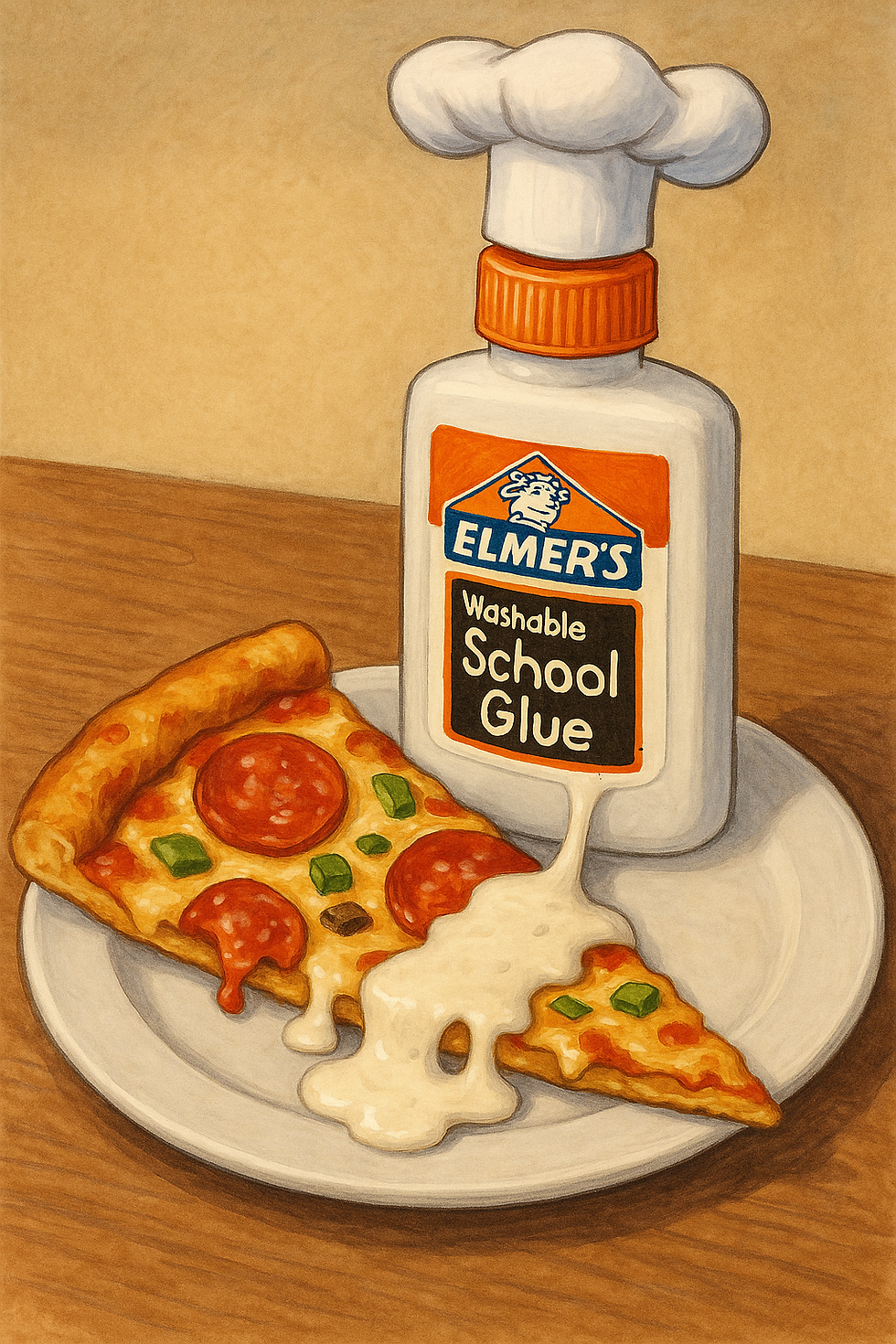

Imagine you’re searching “cheese not sticking to pizza” and the top result from Google’s AI says to add ⅛ cup of non-toxic glue to the sauce to make the cheese stick. That’s exactly what happened early in the rollout of Google’s AI Overviews, and the incident went viral.

It may sound funny, but it points to a deeper problem: generative AI in search can be fast and flashy, but it is also fragile.

What Exactly Happened?

In May 2024, Google rolled out AI Overviews to all US users, and the feature began appearing on a wide range of search queries. Among the bizarre outputs users shared were:

A suggestion to add glue to pizza sauce so cheese would stick.

Advice to eat at least one small rock a day for minerals.

Other false claims, from fake idioms to absurd health or mechanical advice.

Investigations traced the glue advice back to an 11-year-old Reddit joke, which the system failed to recognize as satire. The episode made headlines and prompted scrutiny of how these generative overviews are built and validated.

Why Did the AI Overviews Pizza Glue Fiasco Happen? The Technical & Semantic Breakdown

The Mechanics

AI Overviews rely on large language models (LLMs) plus entity databases such as the Knowledge Graph. They retrieve multiple sources, summarize them, and present the result above the regular search results.

The Failure

What went wrong in the pizza glue case?

The system treated a joke or troll forum comment as real advice.

The filtering mechanisms for satire, user forums, and low-credibility content were insufficient.

Generative systems can produce confident-looking but incorrect statements (hallucinations) when underlying data is unreliable.
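To make the failure concrete, here is a minimal Python sketch of a retrieve-then-answer step, written under loudly stated assumptions: the `Source` fields, the relevance scores, the `.example` URLs, and both functions are hypothetical illustrations, not Google's actual pipeline. The naive version returns whichever snippet best matches the query; the filtered version first drops satire and user-generated forum posts, and abstains if nothing trustworthy is left.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Source:
    """A retrieved snippet plus illustrative credibility flags (all hypothetical)."""
    url: str
    text: str
    relevance: float  # how closely the snippet matches the query, 0.0 to 1.0
    is_forum: bool    # user-generated content, e.g. a discussion thread
    is_satire: bool   # known satire site or other joke signal


def naive_answer(sources: List[Source]) -> str:
    # Pick the most query-relevant snippet with no credibility check at all.
    return max(sources, key=lambda s: s.relevance).text


def filtered_answer(sources: List[Source], allow_forums: bool = False) -> Optional[str]:
    # Drop satire outright and, unless explicitly allowed, forum posts.
    trusted = [
        s for s in sources
        if not s.is_satire and (allow_forums or not s.is_forum)
    ]
    if not trusted:
        return None  # abstain instead of answering from untrusted material
    return max(trusted, key=lambda s: s.relevance).text


# Hypothetical retrieval results for "cheese not sticking to pizza".
sources = [
    Source("https://forum.example/thread/123",
           "Add 1/8 cup of non-toxic glue to the sauce.", 0.95,
           is_forum=True, is_satire=False),
    Source("https://cooking.example/pizza-cheese",
           "Use less sauce and low-moisture mozzarella.", 0.80,
           is_forum=False, is_satire=False),
]

print(naive_answer(sources))     # the forum joke wins on relevance alone
print(filtered_answer(sources))  # the forum joke is filtered out
```

Returning `None` instead of the best remaining snippet reflects one way to cut bad answers: show no summary at all when the evidence is weak. A yes/no forum filter is deliberately blunt; a production system would more plausibly weigh many signals than exclude whole categories of content.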

The Wider Problem

When the AI system can’t distinguish between correct information and memes or jokes, users risk being misled, and Google risks its credibility.

The Repercussions - For Google, Publishers & SEO

For Google

Public trust took a hit. Headlines about glue on pizza made people question the reliability of search. Google responded by scaling back some deployments and adding filters.

For Publishers & SEO

Traffic and click-throughs may decline if AI Overviews answer queries before users click through to publisher websites. Content creators now face a dual challenge:

Staying visible when search results summarize answers before anyone clicks.

Ensuring their work is a credible source for generative systems.

For Users

When the advice is junk, real harm is possible, especially in health, safety, or technical domains. The glue-pizza moment was comical, but it revealed a serious risk.

What Google Did (and Is Doing) About It

Google acknowledged the problems, including the glue and rock suggestions, treating them as quirks of the early rollout.

Actions taken:

Reduced how often AI Overviews appear for certain queries.

Improved detection of satire, jokey forums, and user‐generated content that may not be factual.

Made a public commitment to refining the systems and improving quality. Still, critics point out that the problems aren't fully solved.

Lessons for SEOs & Content Creators

1. Be the credible source

Focus on authoritative content with verified facts. Generative AI systems may pull from you if you’re a reliable entity.

2. Use structured data

Schema markup helps search engines understand your entity and content context, which is important for generative overviews.
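As an illustration, the sketch below builds a schema.org `Article` object as JSON-LD, the structured-data format Google recommends, using Python. The property names (`headline`, `datePublished`, `author`, `publisher`, `sameAs`) are real schema.org vocabulary; the publisher name and `sameAs` URL are hypothetical placeholders, and `article_jsonld` is a hypothetical helper written for this example, not part of any library.

```python
import json
from typing import List


def article_jsonld(headline: str, author: str, date_published: str,
                   publisher: str, same_as: List[str]) -> str:
    """Build a schema.org Article object and serialize it as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601 date
        "author": {"@type": "Person", "name": author},
        "publisher": {
            "@type": "Organization",
            "name": publisher,
            # sameAs links tie the entity to its profiles elsewhere on the web
            "sameAs": same_as,
        },
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


snippet = article_jsonld(
    headline="AI Hallucination: The Viral AI Overviews Pizza Glue Fiasco Explained",
    author="Mohammed Jarekji",
    date_published="2025-10-24",
    publisher="Example Publisher",        # hypothetical placeholder
    same_as=["https://example.com/about"],  # hypothetical placeholder
)

print('<script type="application/ld+json">')
print(snippet)
print("</script>")
```

The printed `<script>` block goes into the page's HTML. Google's Rich Results Test can confirm that the markup parses, though valid markup alone does not guarantee a page will be cited in an AI Overview.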

3. Monitor how your content is surfaced

Check whether your content appears in AI summaries, is cited as a source, or is ignored.

4. Prioritize accuracy and trust

Don’t produce content optimized for quick hits; aim for depth, transparency, and the kind of credibility that no AI mistake can undermine.

5. Adapt to the shift

As the search experience evolves (see articles on entity SEO, Knowledge Graph and AI Overviews), your strategy must also evolve to serve both users and AI systems.

Key Takeaways

The pizza glue fiasco is more than a meme. It’s a wake-up call for search AI and SEO.

For Google: generative search must balance innovation with reliability.

For content creators: your role shifts from ranking pages to being the trusted entity behind answers.

For users: always verify information, even when it pops up first.

FAQs

Why do AI systems like Google’s Overviews make confident but false claims?

AI language models are trained to predict the most likely next word based on patterns in text, not to verify factual accuracy.

When the training data contains jokes, sarcasm, or misinformation, the model may reproduce them as if they were facts, especially when phrased with high confidence. This phenomenon is known as AI hallucination.
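A toy bigram model shows the core issue in miniature. The sketch below is a deliberate simplification, assuming a made-up three-sentence corpus: real LLMs are neural networks over tokens and far more sophisticated, but they share the objective of predicting likely continuations rather than checking truth. Because the joke appears twice and the sensible tip once, the most likely word after "add" is "glue".

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def next_word_probs(follows, word):
    """Turn raw follow-counts into probabilities for the next word."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(follows, start, max_words=8):
    """Greedily append the most frequent next word. Nothing here checks facts."""
    words = [start]
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical corpus: the joke is repeated, the sensible tip appears once.
corpus = [
    "cheese sticks better if you add glue",
    "cheese sticks better if you add glue",
    "cheese sticks better if you add less sauce",
]
model = train_bigrams(corpus)
print(next_word_probs(model, "add"))  # glue ≈ 0.67, less ≈ 0.33
print(generate(model, "cheese"))      # → cheese sticks better if you add glue
```

Nothing in the model represents whether glue is edible; the joke wins purely on frequency. That is the sense in which fluent output can be confidently wrong.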

Can Google fully eliminate errors like the pizza glue suggestion?

Not entirely. While Google continues improving filters, source ranking, and model alignment, no generative system can guarantee 100% factual accuracy.

Errors can still slip through when topics are ambiguous, niche, or poorly represented in training data. Google’s challenge is balancing creativity and recall with factual precision.

How does Google’s AI decide which sources to trust?

The system combines multiple signals:

Structured data and schema markup

Authority signals such as backlinks and E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)

Entity consistency across platforms (e.g., Wikipedia, Wikidata, Google Business Profile)

It also cross-references the Knowledge Graph to verify entity relationships. However, even with these safeguards, community-driven sites like Reddit can introduce noise.

Are AI Overviews less reliable for certain topics?

Yes. AI Overviews perform best on topics with high factual density (science, geography, product specs) and worse on subjective or conversational topics (lifestyle, humor, health advice). The more open-ended the query, the greater the risk that the model will generate partially or completely inaccurate answers.

How can users and SEOs help improve AI accuracy?

By publishing clear, well-sourced, and factual content with structured data.

Google’s models learn indirectly from the quality of web content they summarize. So accurate, consistent, and transparent publishing practices raise the reliability of AI-generated results across the web.
